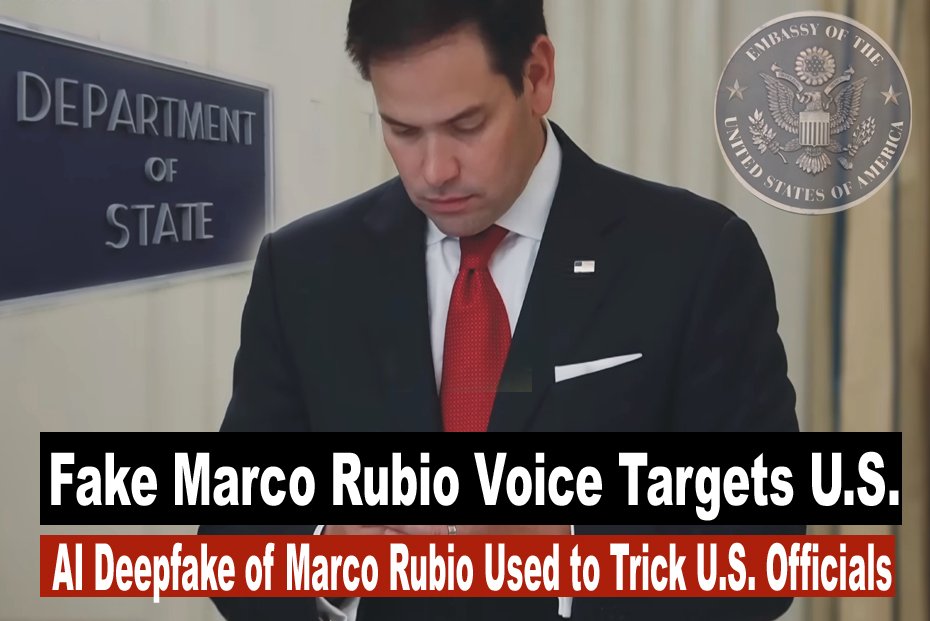

AI Deepfake of Marco Rubio Used to Trick U.S. Officials — Cybersecurity on High Alert

AI Impersonation Sparks Alarm: Fake Marco Rubio Voice Used to Target Senior Officials

A recent case of AI-generated impersonation involving U.S. Secretary of State Marco Rubio has raised serious concerns about cybersecurity, political disinformation, and the emerging use of artificial intelligence in digital fraud. According to reports, an individual used artificial intelligence to generate a fake voice resembling Rubio’s and sent audio and text messages while posing as him. These deceptive messages reportedly reached at least five high-ranking government officials, causing confusion and exposing a security gap at the top levels of leadership.

A Deepfake in High Places

The fraudster reportedly used AI voice cloning tools to replicate Marco Rubio’s voice convincingly enough to pass as him. The cloned recordings were sent to high-ranking government officials as voice messages, followed by text messages. While the identity and motives of the impersonator remain unclear, the fact that this deception reached senior leadership has sparked widespread concern.

This incident is a wake-up call for national security agencies, and it underscores how quickly AI tools have become capable of mimicking human voices and personas with convincing precision.

How the Scam Unfolded

Though investigations are ongoing, initial reports suggest that the scammer first contacted the officials with pre-recorded voice messages that sounded nearly indistinguishable from Marco Rubio. These messages contained requests and instructions that seemed legitimate at first glance (or rather, first listen). Follow-up text messages reinforced the illusion of authenticity.

In some cases, the impersonator requested sensitive information or attempted to establish phone conversations, possibly to gather intelligence, sway decisions, or create political miscommunication.

The Role of AI in Voice Cloning

Voice cloning technology has advanced significantly in recent years, largely due to developments in machine learning, neural networks, and generative AI models. Today, anyone with access to basic AI tools and a few minutes of someone’s recorded speech can create a believable voice clone.

Platforms offering AI-generated voices are now widespread, and while many are used for legitimate purposes like audiobooks, virtual assistants, or entertainment, bad actors are exploiting these tools for malicious purposes such as scams, impersonation, and disinformation campaigns.

The Marco Rubio case is a clear reminder that artificial intelligence can be used to target even the most powerful figures in government, turning technology against the people responsible for national and global decision-making.

Growing Cybersecurity Dangers and the Risk of Misinformation

According to cybersecurity experts, this case reveals a serious vulnerability that could have far-reaching consequences. It shows how AI tools can be misused to impersonate trusted voices, causing confusion, spreading false information, and potentially interfering with official processes.

When senior officials are tricked by fake messages that seem to come from trusted figures, the consequences can be severe: diplomatic confusion, strategic manipulation, and the spread of false narratives.

The growing trend of “deepfake diplomacy,” where AI-generated content is deliberately used to create tension or disrupt political stability, could become increasingly common unless effective protections and regulations are established.

This incident serves as a chilling preview of how AI can be weaponized in both domestic and international contexts.

Moreover, AI voice impersonation can be combined with phishing, social engineering, and data breaches to create multi-layered attacks that are harder to detect and prevent.

Legal and Ethical Implications

Currently, there is a lack of comprehensive legislation governing the use of AI for voice cloning and deepfakes in many countries, including the United States. While some states have begun introducing laws to restrict AI impersonation, the technology is evolving much faster than the legal framework can adapt.

If the individual behind the impersonation is eventually identified, bringing them to justice may still be challenging unless existing laws specifically address such acts. The lack of clear legal guidelines creates a loophole that cybercriminals can exploit, highlighting the pressing need for updated rules around digital identity, accountability, and AI misuse.

Steps Toward a Solution

To address this growing threat, experts and officials are calling for:

Stronger AI regulations – Governments need to pass clear laws around AI-generated content, especially when used for impersonation and fraud.

Authentication protocols – High-level communications should include identity verification systems that go beyond just voice or text recognition.

Public awareness – Citizens and officials alike must be educated on the risks of AI impersonation and trained to recognize potential red flags.

Tech industry responsibility – Developers and platforms offering AI tools should implement safeguards to prevent misuse, such as watermarking synthetic content or requiring verification for access.

Cybersecurity investment – More resources should be directed toward building defenses against deepfakes, including real-time detection tools and anomaly detection systems.

A Warning for the Future

The impersonation of Marco Rubio is more than a technical prank. It is a sign of the disruptive power AI holds in the wrong hands. As synthetic voices and deepfakes become more convincing, the line between real and fake will continue to blur, making it increasingly difficult to trust what we see and hear online.

Governments, companies, and citizens must act swiftly and collaboratively to develop the tools, policies, and awareness needed to navigate this new AI-powered landscape safely.

This incident should serve as a catalyst for a global conversation on AI ethics, digital identity, and cybersecurity before the next voice you hear (real or fake) leads to consequences far more dangerous than confusion.

Pingback: Tesla Cars Get A Brain: Grok 4 AI Integration Begins Next Week